Process Summary

- ✔️ Create a new project in the Google Developers API Console

- ✔️ Go to Enable APIs and Services and enable the Google Drive API

- ✔️ Create credentials for a desktop app

- ✔️ Install gupload and run it with the credentials you downloaded

- ✔️ Create a backup script and a cron job

Introduction

I wanted a bash script that automatically backs up my database to Google Drive as a compressed (gzip) file. I went looking and finally landed on the perfect tool for the job. Here is how you can do it too.

Steps

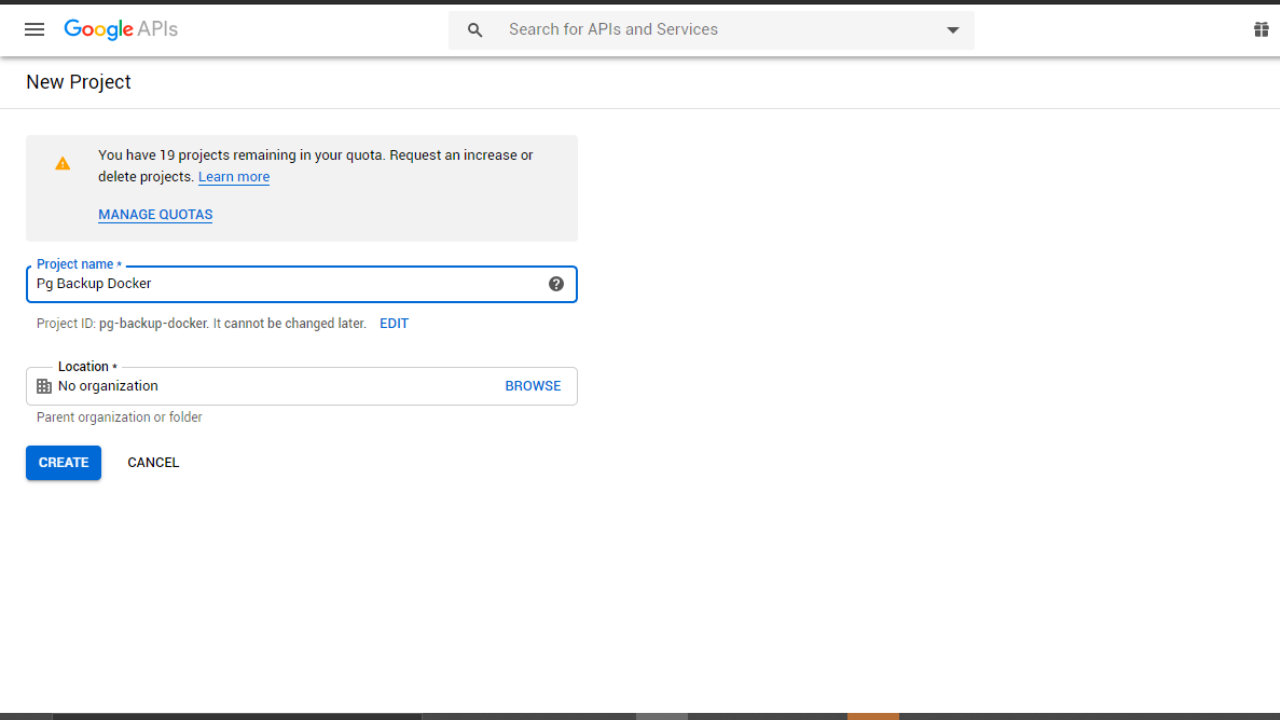

✔️ Step one: create a new project in the Google Developers API Console

Go to the Google API Console and create a project. You can call it anything. Skip this if you already have a project.

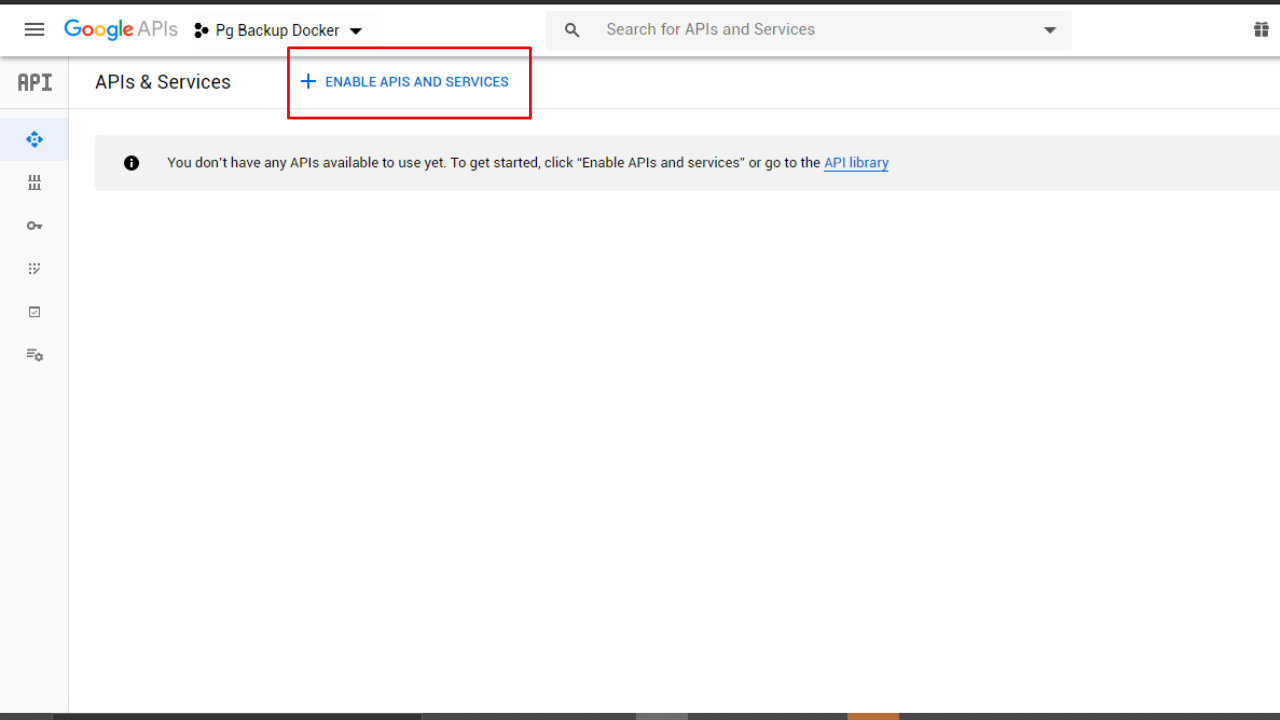

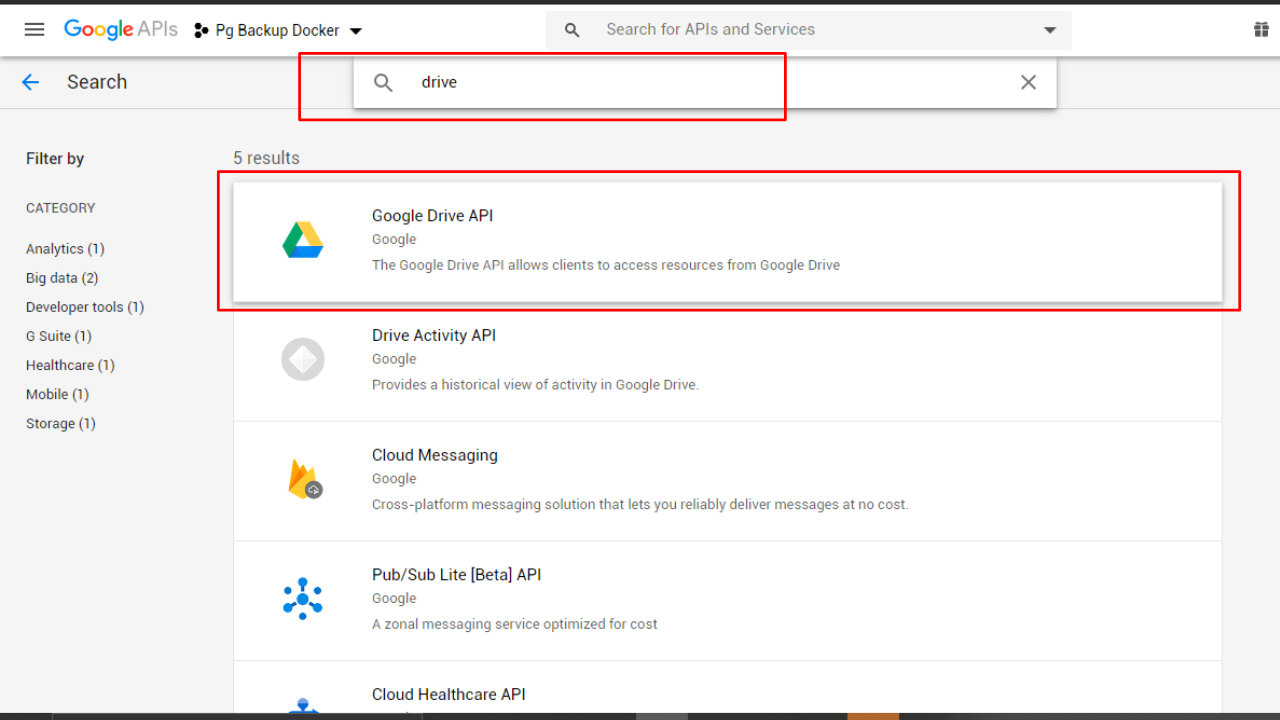

✔️ Step two: enable the Google Drive API

Search for "drive" to filter the APIs and services so you can easily spot Google Drive.

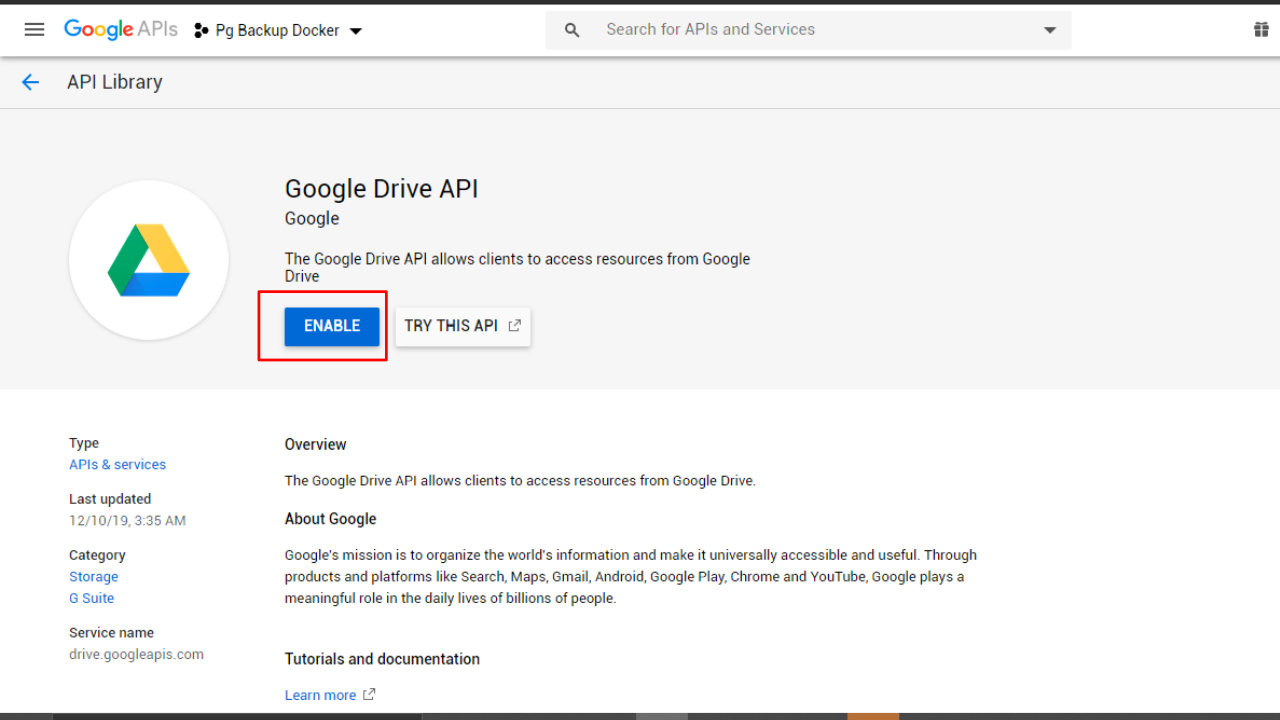

Enable the Google Drive API.

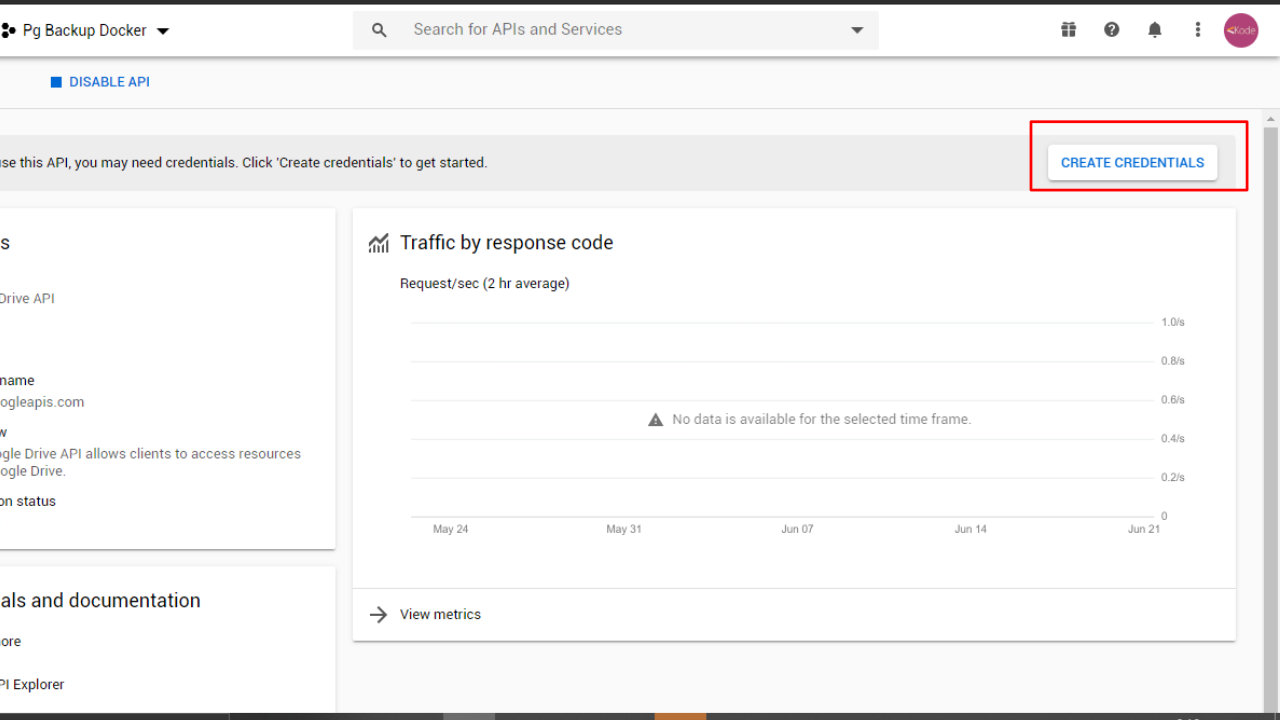

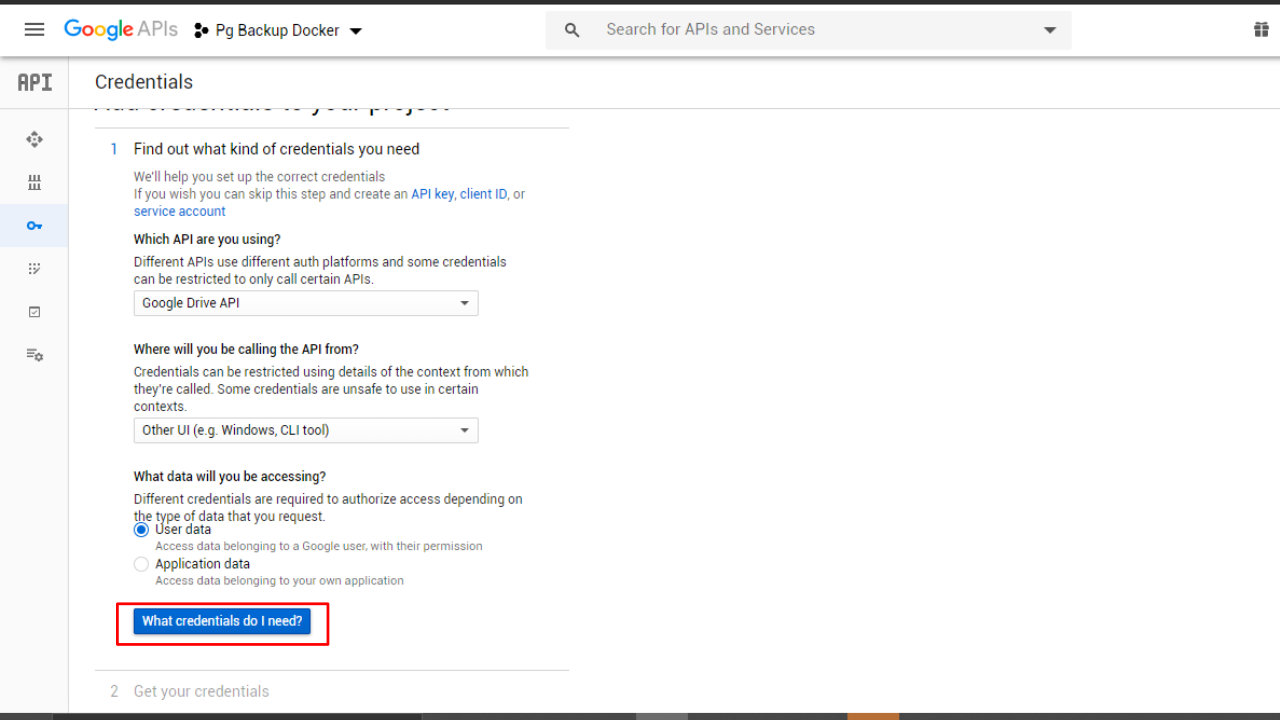

✔️ Step three: create credentials for a desktop app

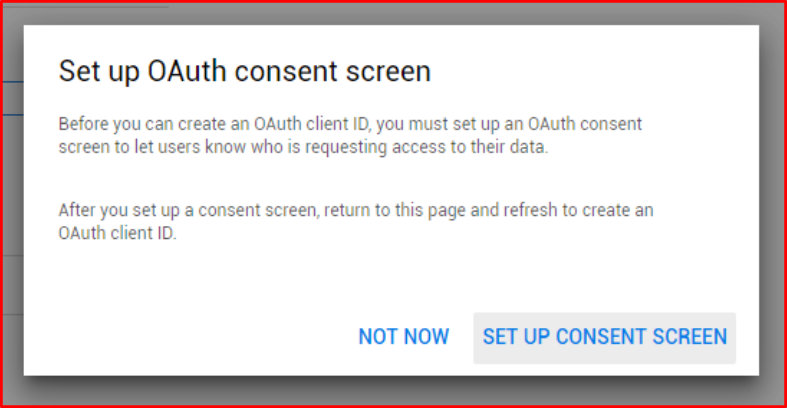

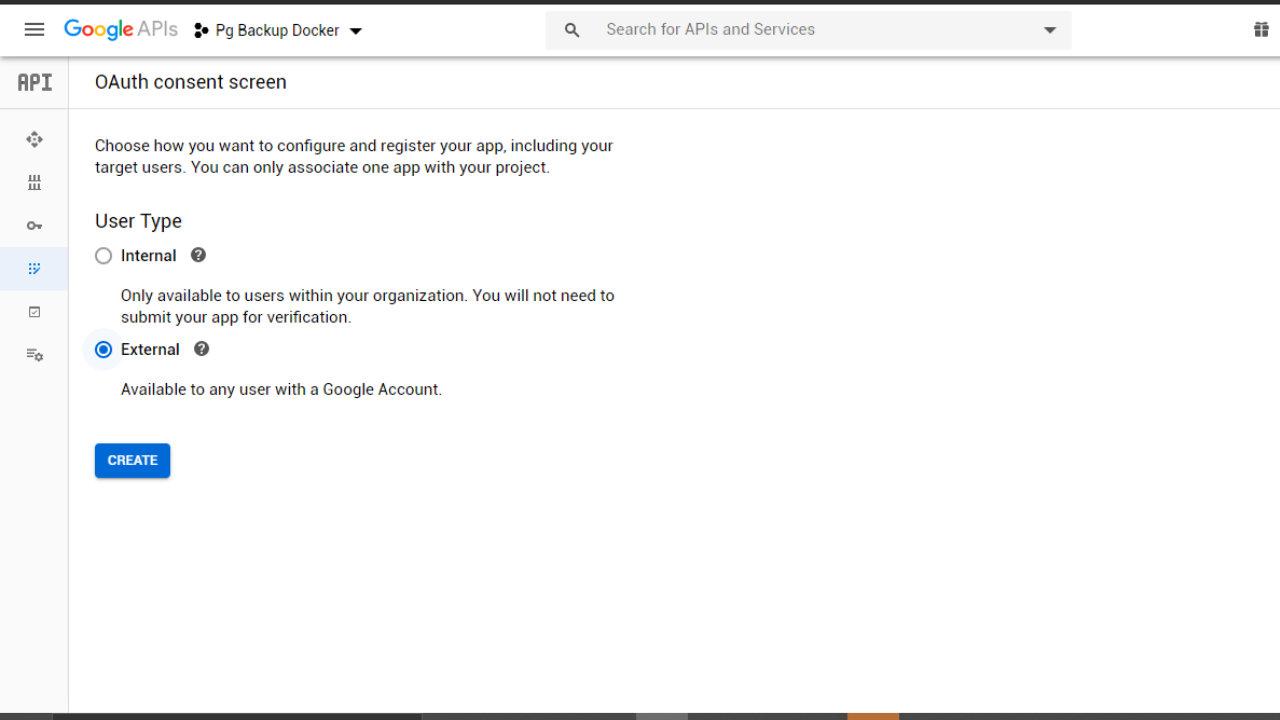

Configure the consent screen as directed by the popup.

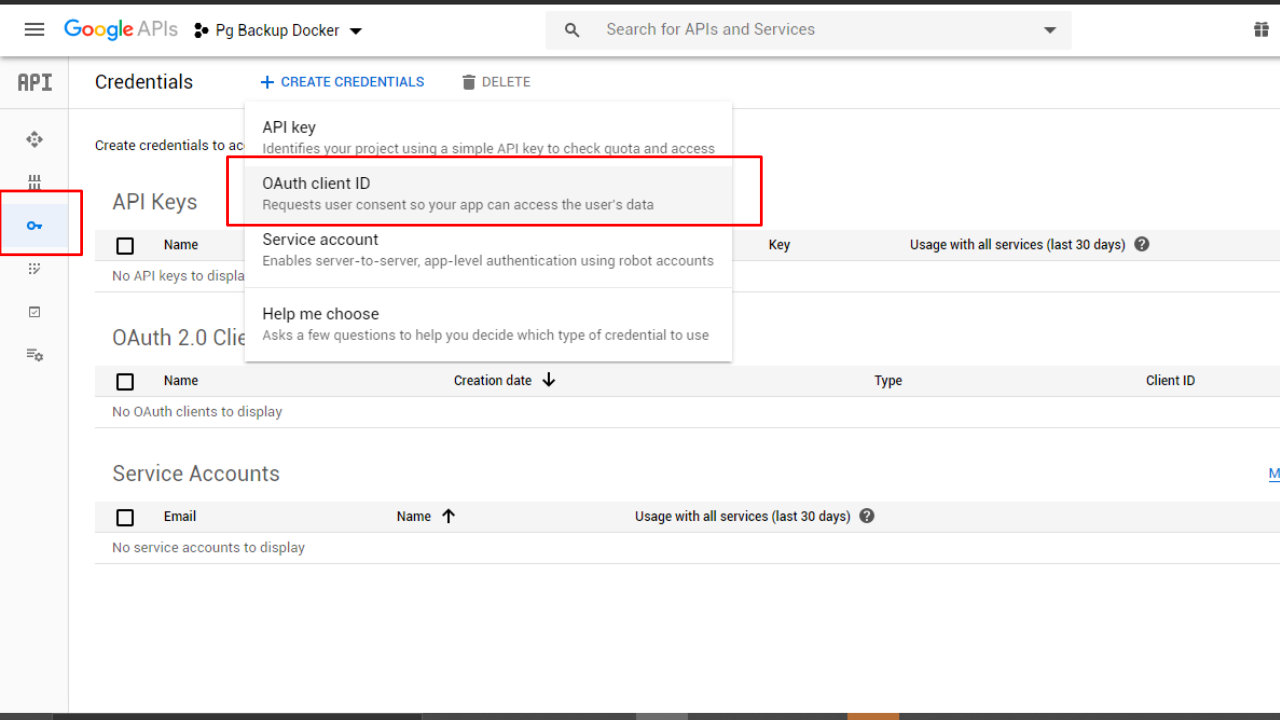

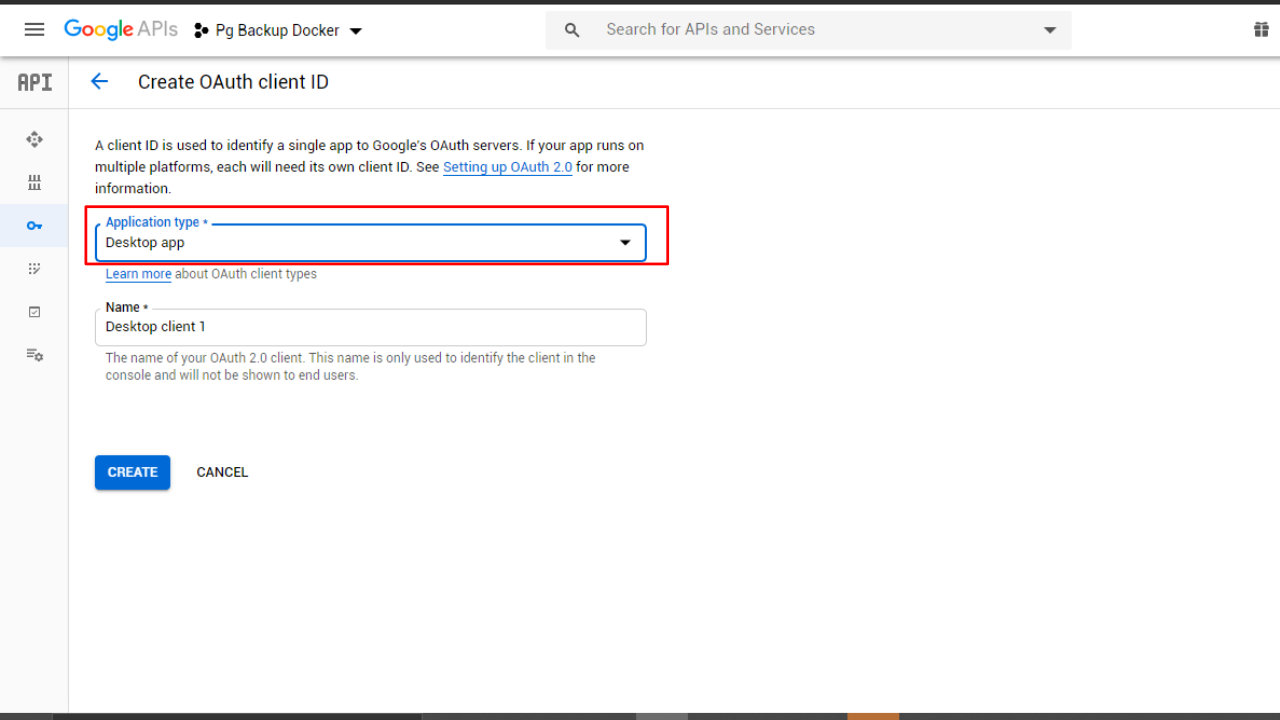

Then go to the Credentials tab, choose Create credentials again, and select Desktop app as the application type:

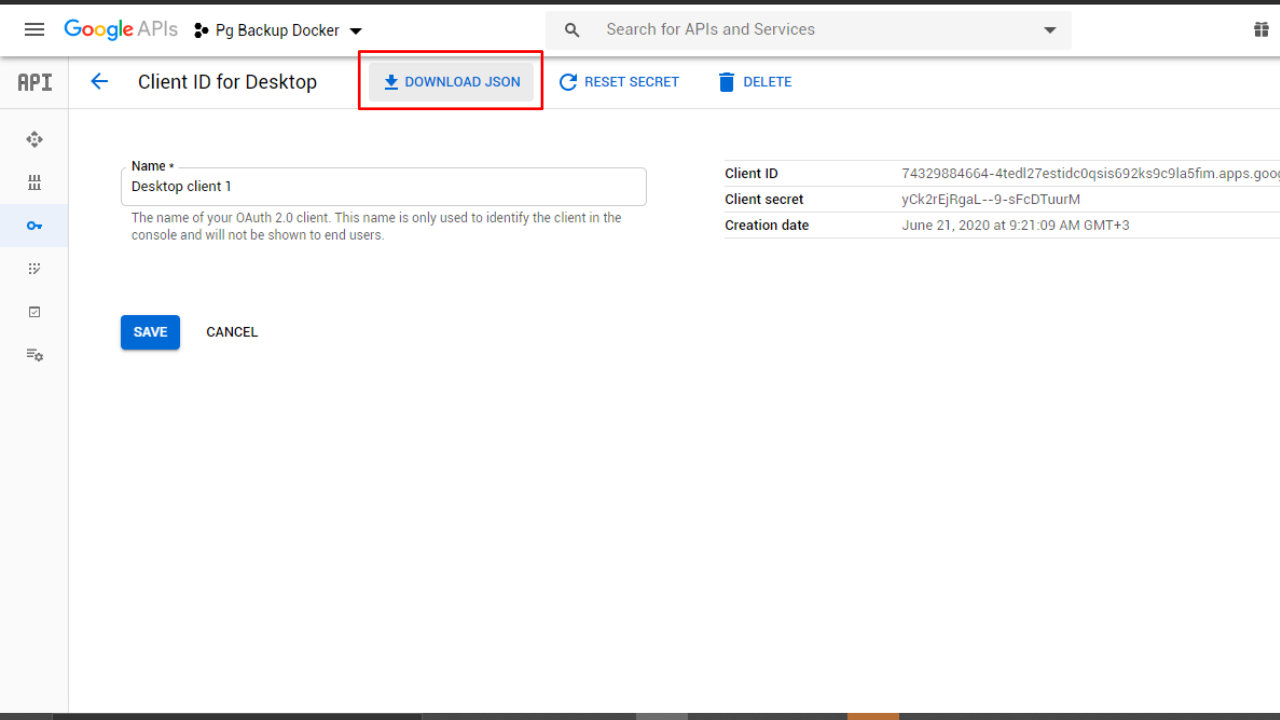

When you finish, go back to the Credentials tab, click the credentials you just created, and download the JSON file containing them.

The downloaded JSON file contains your client ID (client_id) and client secret (client_secret), which you will need in the next step.
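If you want to double-check which values to paste in the next step, here is a rough sketch of a shell function that pulls one string field out of the downloaded file. It assumes the file uses Google's usual layout with client_id and client_secret string fields, and that the values contain no escaped quotes (true for client IDs and secrets). The function name read_oauth_field and the file path in the usage comment are hypothetical; if jq is installed on your machine, it is the more robust choice.

```shell
#!/bin/bash
# Hypothetical helper: print the value of one string field (e.g. client_id)
# from the OAuth client JSON downloaded from the Google API Console.
# Rough sketch: matches "field": "value" anywhere in the file and assumes the
# value contains no escaped quotes, which holds for client IDs and secrets.
read_oauth_field() {
  local file="$1" field="$2"
  grep -o "\"${field}\"[[:space:]]*:[[:space:]]*\"[^\"]*\"" "$file" \
    | head -n 1 \
    | sed -E 's/^.*:[[:space:]]*"([^"]*)"$/\1/'
}

# Usage (the file name is an assumption; Google generates a longer one):
# read_oauth_field ~/client_secret.json client_id
# read_oauth_field ~/client_secret.json client_secret
```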

✔️ Step four: install gupload

SSH into your server and run the following command:

bash <(curl --compressed -s https://raw.githubusercontent.com/labbots/google-drive-upload/master/install.sh)

Create a folder called backup in your home directory (~/):

cd ~ && mkdir backup

Run gupload for the first time:

gupload backup

When prompted, provide the credentials (CLIENT ID and CLIENT SECRET) from the JSON file you downloaded.

When asked for a refresh token, press Enter. You will see a link in the terminal. Copy and paste the link into your browser, allow access to your Google account, then copy the code you get and paste it back into the SSH terminal. When asked for the root folder, just press Enter.

If you enter the wrong credentials, you have to start again: delete gupload's config file at ${HOME}/.googledrive.conf, then run gupload backup again.

If everything went well, you should see a folder named backup in your Google Drive.

For more detailed instructions, see the official google-drive-upload repository (labbots/google-drive-upload on GitHub).

✔️ Step five: create a bash script and a cron job

The cron job runs a backup of your Postgres database at regular intervals. In my case, I wanted it to run daily at midnight.

The cron job runs a bash script. Save the following script as backup_to_drive.sh in your home directory, since the cron entry below refers to ~/backup_to_drive.sh:

#!/bin/bash

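docker-compose exec -T postgres_container_name pg_dumpall -c -U user | gzip > ~/backup/dump_$(date +%d-%m-%Y_%H_%M_%S).gz

gupload backup -d

The first command runs pg_dumpall inside the Postgres container, which dumps every database in the cluster along with global objects such as roles. The -T flag disables pseudo-TTY allocation: cron has no terminal, and an allocated TTY can also corrupt the piped dump. The -c flag adds commands that drop each database before recreating it, and the -U flag specifies the database user.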

The output of pg_dumpall is piped to gzip, which compresses it, and the > redirection saves the result in the backup folder in your home directory.
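One caveat with this pipeline: by default, a pipeline's exit status is that of its last command (gzip), so if pg_dumpall fails, the script still writes a small, nearly empty .gz file and gupload uploads it. The sketch below is a hypothetical dump_to_gz helper, not part of the original script, that turns on pipefail for the pipeline and deletes the partial file when any stage fails.

```shell
#!/bin/bash
# Hypothetical helper: run a dump command, gzip its output into OUT, and
# report failure if ANY stage of the pipeline fails, not just gzip.
dump_to_gz() {
  local out="$1"
  shift
  # pipefail is set inside a subshell so the caller's options are unchanged
  if ! ( set -o pipefail; "$@" | gzip > "$out" ); then
    rm -f -- "$out"   # don't leave a truncated dump for gupload to pick up
    echo "dump failed: $*" >&2
    return 1
  fi
}

# Usage in backup_to_drive.sh (run from the directory holding your compose
# file, or add -f path/to/docker-compose.yml):
# dump_to_gz ~/backup/dump_$(date +%d-%m-%Y_%H_%M_%S).gz \
#   docker-compose exec -T postgres_container_name pg_dumpall -c -U user || exit 1
```

Exiting on failure also means the gupload line never runs for a broken dump.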

We created the backup folder earlier because the > redirection in the script needs it: without the folder, the command fails with a "No such file or directory" error.
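If you would rather not depend on having created the folder by hand, a small helper can create it on the fly and build the same timestamped file name the script uses. Treat it as a sketch: the name new_dump_path and the ~/backup default are assumptions matching this post's setup.

```shell
#!/bin/bash
# Hypothetical helper: make sure the backup folder exists, then print a new
# timestamped dump path in it, e.g. ~/backup/dump_31-12-2023_00_00_00.gz
new_dump_path() {
  local dir="${1:-$HOME/backup}"
  mkdir -p -- "$dir" || return 1   # no-op if the folder already exists
  # Same naming scheme as backup_to_drive.sh: dump_DD-MM-YYYY_HH_MM_SS.gz
  printf '%s/dump_%s.gz\n' "$dir" "$(date +%d-%m-%Y_%H_%M_%S)"
}

# Usage: out=$(new_dump_path) && echo "writing to $out"
```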

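Replace postgres_container_name with the name of your Postgres service as defined in your docker-compose config, and user with your actual database user. I use the user with the highest privileges, since pg_dumpall reads every database and usually needs superuser access to produce a complete dump.

To see your running containers, run docker ps. Keep in mind that docker-compose exec expects the service name from your compose file rather than a container ID; if you would rather use the container ID or name shown by docker ps, use docker exec instead of docker-compose exec. I am sure you are using a distinct identifier for each container; Docker Compose does that nicely.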

The second command runs gupload on the backup folder with the -d flag, which tells gupload to skip any file that already exists on Google Drive. This matters because each run should upload only the new backup file, not everything in the folder.
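One thing to watch when the script runs from cron rather than your SSH session: cron starts jobs with a minimal environment and a short default PATH, so a command that works interactively, such as gupload installed under your user account, may not be found. A hypothetical require_cmds check near the top of backup_to_drive.sh makes that failure explicit and easy to spot. It is only a sketch; if it reports gupload as missing, run command -v gupload in your SSH session and use that full path in the script (or extend PATH there).

```shell
#!/bin/bash
# Hypothetical helper: fail early, with a clear message, if any required
# command is not on PATH (cron's PATH is much shorter than your login shell's).
require_cmds() {
  local cmd missing=0
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing command: $cmd (PATH=$PATH)" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage near the top of backup_to_drive.sh:
# require_cmds docker-compose gzip gupload || exit 1
```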

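This cron entry runs the script every day at midnight. Its five time fields are minute, hour, day of month, month, and day of week, so 0 0 * * * means minute 0 of hour 0, every day: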

0 0 * * * bash ~/backup_to_drive.sh

To install the cron job, run crontab -e and add the line above as the last line of the file.
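If you prefer not to edit the crontab interactively, for example when setting up a server from a script, here is a sketch that appends the entry non-interactively and avoids duplicating it when run twice. The helper name add_cron_entry is hypothetical; it only rewrites text, while crontab -l (print the current crontab) and crontab - (install a crontab from standard input) do the reading and installing.

```shell
#!/bin/bash
# Hypothetical helper: read a crontab on stdin and print it with ENTRY as the
# last line, dropping any identical copy so repeated runs don't duplicate it.
add_cron_entry() {
  local entry="$1"
  # -v: invert, -x: whole-line match, -F: fixed string (no regex)
  grep -vxF -- "$entry" || true   # grep exits 1 when it prints nothing
  printf '%s\n' "$entry"
}

# Usage: read the current crontab (if any), add the entry, install the result.
# crontab -l 2>/dev/null \
#   | add_cron_entry '0 0 * * * bash ~/backup_to_drive.sh' \
#   | crontab -
```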

You are all set! 🚀 🚀

If you have any questions, leave them below.

I run two Postgres databases in production. One is connected to a Django app and runs on Docker; the other powers a Node.js server. Fun fact: I was once locked out of my own server due to a bad config, so having a copy of the data is handy.
